All my concerns about hydrogen #hopium, in one convenient place!

Hydrogen is being sold as if it were the “Swiss Army knife” of the energy transition. Useful for every energy purpose under the sun. Sadly, hydrogen is rather like THIS Swiss Army knife, the Wenger 16999 Giant. It costs $1400, weighs 7 pounds, and is a suboptimal tool for just about every purpose!

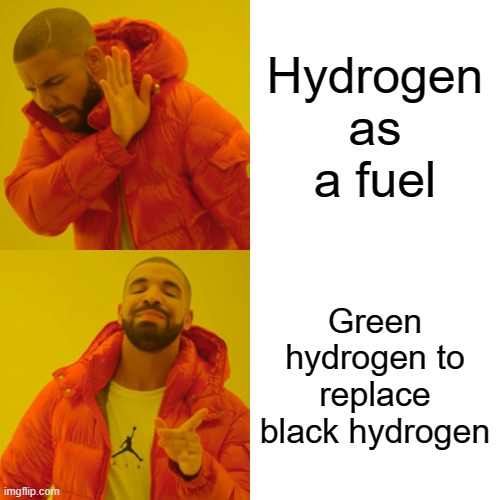

Why do you hate hydrogen so much? I DON’T HATE HYDROGEN! I think it’s a dumb thing to use as a fuel, or as a way to store electricity. That’s all.

I also think it’s part of a bait and switch scam being put forward by the fossil fuel industry. And what about the electrolyzer and fuelcell companies, the technical gas suppliers, natural gas utilities and the renewable electricity companies that are pushing hydrogen for energy uses? They’re just the fossil fuel industry’s “useful idiots” in this regard.

https://www.jadecove.com/research/hydrogenscam

If you prefer to listen rather than read, I appeared as a guest on the Redefining Energy Podcast, hosted by Laurent Segalen and Gerard Reid, in episodes 19 and 44.

https://redefining-energy.com/

…or to my participation in a recent Reuters Renewables debate event, attended by about 3,000 people.

This article links to the articles where I set out my opinions about hydrogen in depth, along with some articles by others that I've found helpful and accurate.

Hydrogen For Transport

Not for cars and light trucks. The idea seems appealing, but the devil is in the details if you look at this more than casually.

https://www.linkedin.com/pulse/hydrogen-fuelcell-vehicle-great-idea-theory-paul-martin/

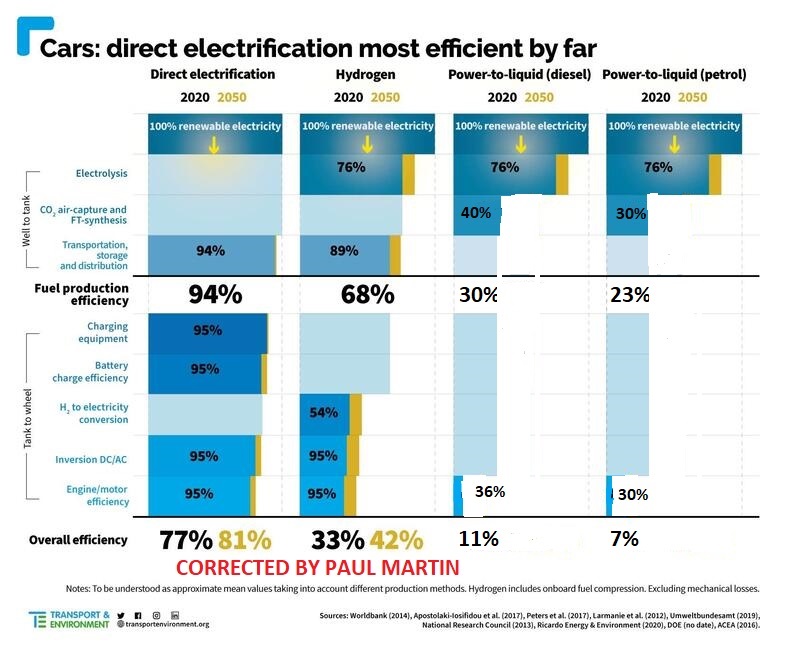

When you look at two cars with the same range that you can actually buy, it turns out that my best case round-trip efficiency estimate- 37%- is too optimistic. The hydrogen fuelcell car uses 3.2x as much energy and costs over 5.4x as much per mile driven.

https://www.linkedin.com/pulse/mirai-fcev-vs-model-3-bev-paul-martin/
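
A round-trip efficiency is simply the product of the efficiencies of each conversion step in series, which is why the losses pile up so quickly. Here is a minimal Python sketch of that arithmetic. The stage names and values are illustrative assumptions picked to land near the 37% best case, not the numbers used in the linked article.

```python
from math import prod

# Illustrative stage efficiencies (assumptions, not the linked article's figures).
# The drive motor is common to both vehicles, so it is left out of both chains.
FCEV_CHAIN = {"electrolysis": 0.70, "compression": 0.90, "fuel_cell": 0.60}
BEV_CHAIN = {"charging": 0.90, "battery_round_trip": 0.95}


def chain_efficiency(stages: dict[str, float]) -> float:
    """Stages in series: the overall efficiency is the product of the stage efficiencies."""
    if any(not 0.0 < eta <= 1.0 for eta in stages.values()):
        raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stages.values())


def energy_ratio(chain_a: dict[str, float], chain_b: dict[str, float]) -> float:
    """How many times more input electricity chain_a needs than chain_b for the same useful output."""
    return chain_efficiency(chain_b) / chain_efficiency(chain_a)


if __name__ == "__main__":
    print(f"FCEV chain: {chain_efficiency(FCEV_CHAIN):.1%}")
    print(f"BEV chain:  {chain_efficiency(BEV_CHAIN):.1%}")
    print(f"FCEV needs {energy_ratio(FCEV_CHAIN, BEV_CHAIN):.1f}x the electricity of the BEV")
```

A chain like this leaves out vehicle-level differences, so it won't reproduce the 3.2x measured on the two real cars; it only shows how multiplied losses compound.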

What about trucks? Ships? Trains? Aircraft?

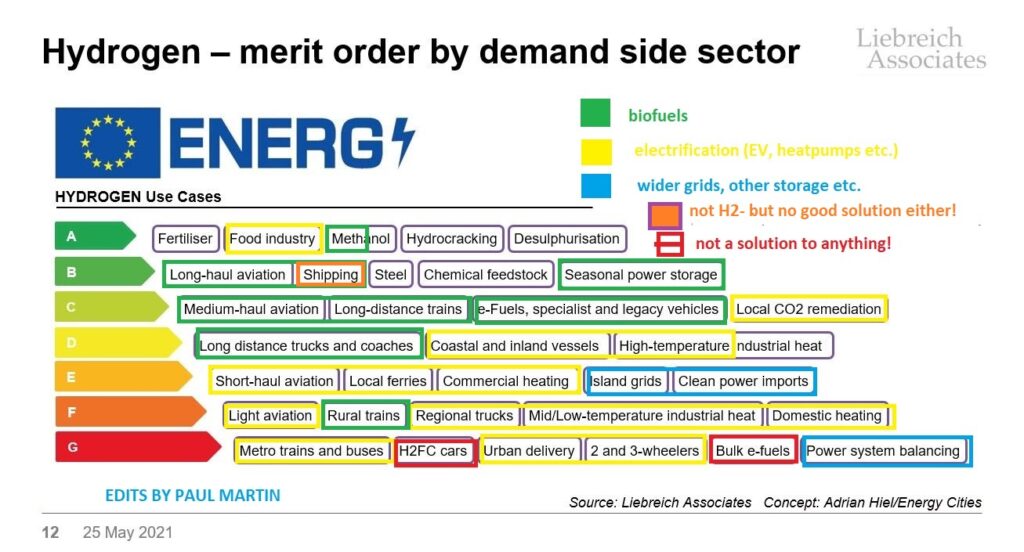

For trucks- I agree with James Carter- they're going EV. EVs will take over the duty from the short-range end, and biofuels will take the longer-range, remote/rural delivery market for logistical reasons. Hydrogen has no market left in the middle, in my opinion.

Trains: same deal.

Aircraft? Forget about jet aircraft powered by hydrogen. We’ll use biofuels for them, or we’ll convert hydrogen and CO2 to e-fuels if we can’t find enough biofuels. And if we do that, we’ll cry buckets of tears over the cost, because inefficiency means high cost.

(Note that the figures provided by Transport and Environment overstate the efficiency of hydrogen and of the engines used in the e-fuels cases- but in jets, a turbofan is likely about as efficient as a fuelcell in terms of thermodynamic work per unit of fuel LHV fed. The point of the figure is to show the penalty you pay for converting hydrogen and CO2 into an e-fuel; the original T&E chart overstated that efficiency significantly.)

Ships? There’s no way in my view that the very bottom-feeders of the transport energy market- used to burning basically liquid coal (petroleum residuum-derived bunker fuel with 3.5% sulphur, laden with metals and belching out GHGs without a care in the world) are going to switch to hydrogen, much less ammonia, with its whopping 11-19% round-trip efficiency.

https://www.linkedin.com/pulse/ammonia-pneumonia-paul-martin/

Heating

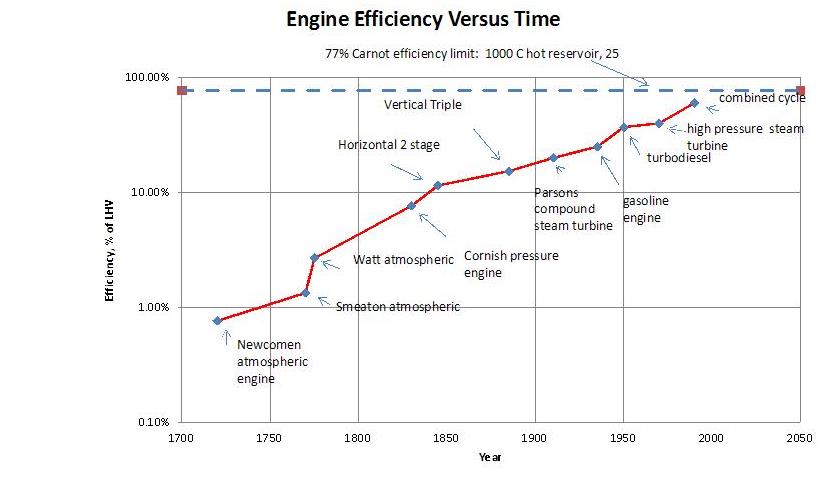

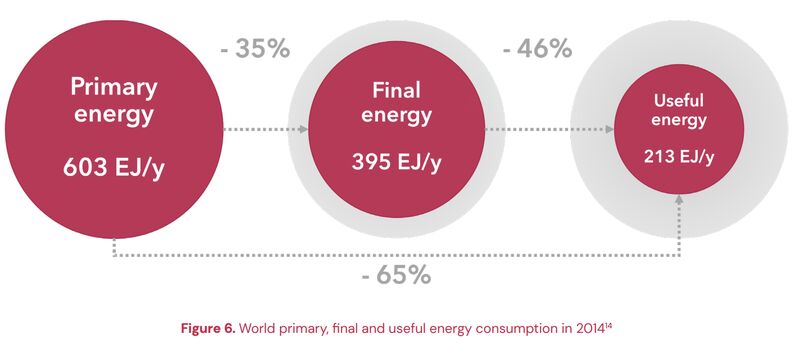

Fundamentally, why do we burn things? To make heat, of course!

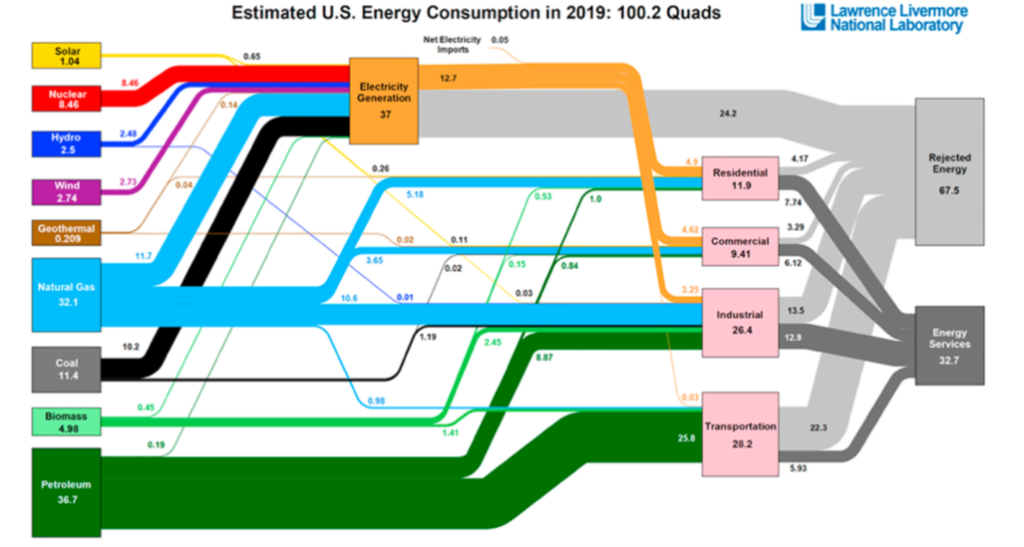

Right now, we burn things to make heat to make electricity. Hence, it is cheaper to heat things using whatever we're burning to make electricity than it is to use electricity. Even with a heat pump with a coefficient of performance (COP) of 3, so that we pump 3 joules of heat for every joule of electricity we feed, it's still cheaper to skip the electrical middleman and use the fuel directly, saving all that capital and all those energy losses.

https://www.linkedin.com/pulse/home-heating-electrification-paul-martin/
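
The comparison above comes down to the cost of delivered heat: divide the price of each input by how much heat one unit of it delivers. The Python sketch below does only that. The prices and the 95% furnace efficiency are hypothetical placeholders; only the COP of 3 comes from the text, and the capital cost of the heat pump and of the power plant is left out entirely.

```python
def heat_cost(price_per_kwh_input: float, heat_per_kwh_input: float) -> float:
    """Cost of one kWh of delivered heat.

    heat_per_kwh_input is the furnace efficiency for a fuel, or the COP for a heat pump.
    """
    if heat_per_kwh_input <= 0:
        raise ValueError("heat delivered per kWh of input must be positive")
    return price_per_kwh_input / heat_per_kwh_input


def breakeven_electricity_price(fuel_price: float, furnace_eff: float, cop: float) -> float:
    """Electricity price below which a heat pump beats burning the fuel, on energy cost alone."""
    return fuel_price * cop / furnace_eff


if __name__ == "__main__":
    # Hypothetical prices in $/kWh and a hypothetical furnace efficiency, for illustration only.
    gas_price, elec_price = 0.04, 0.15
    furnace_eff, cop = 0.95, 3.0
    print(f"gas furnace: ${heat_cost(gas_price, furnace_eff):.3f} per kWh of heat")
    print(f"heat pump:   ${heat_cost(elec_price, cop):.3f} per kWh of heat")
    print(f"break-even electricity price: ${breakeven_electricity_price(gas_price, furnace_eff, cop):.3f}/kWh")
```

The break-even function shows how large the gap between fuel and electricity prices can get before the heat pump stops paying for itself on energy cost alone.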

Accordingly, hydrogen, which is made from a fuel (methane), is not used as a fuel. Methane is the cheaper option, obviously!

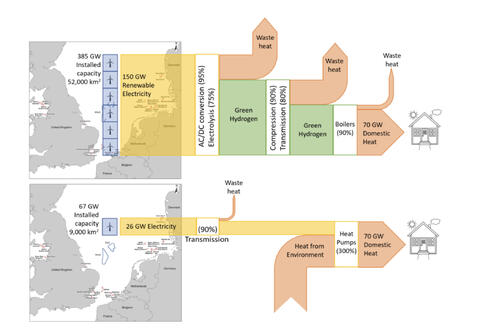

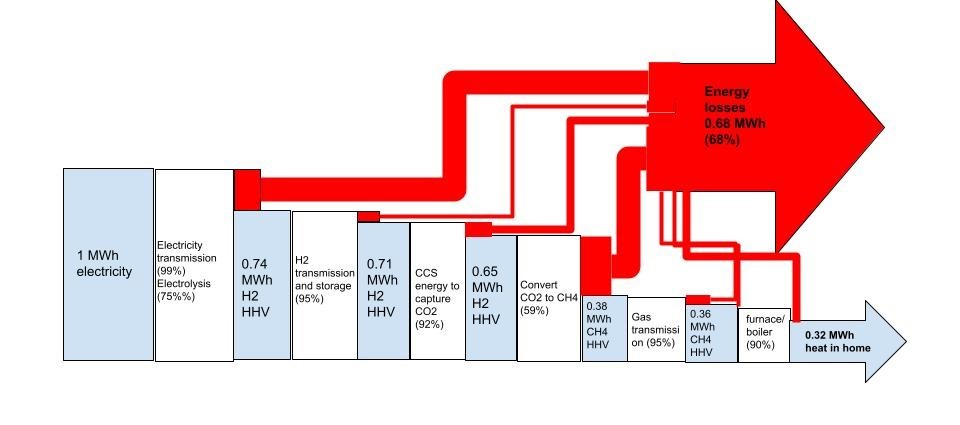

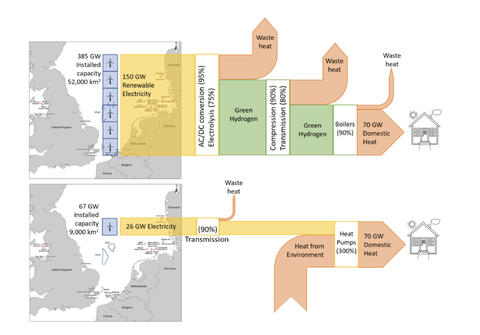

In the future, we're going to start with electricity made from wind, solar, geothermal etc. Then it will be cheaper to use electricity directly to make heat, rather than losing a bare minimum of 30% of our electricity to make a fuel (hydrogen) from it first. By cutting out the molecular middleman, we'll save energy and capital. It will be cheaper to heat using electricity.
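
Running the comparison from renewable electricity, this Python sketch counts how much electricity each route needs to deliver the same heat. The 70% electrolysis efficiency reflects the 30% minimum loss quoted above, the COP of 3 is the heat pump figure used earlier in the article, and the 90% hydrogen boiler efficiency is an assumption. Hydrogen compression, storage, and transport losses are ignored, which flatters the hydrogen route.

```python
# 0.70: the "30% bare minimum" electrolysis loss from the text (a best case)
ELECTROLYSIS_EFF = 0.70
# 0.90: hydrogen boiler efficiency, an illustrative assumption
H2_BOILER_EFF = 0.90
# 3.0: the heat pump COP used earlier in the article
HEAT_PUMP_COP = 3.0

# kWh of heat delivered per kWh of electricity, by route
HEAT_PER_KWH_ELEC = {
    "hydrogen boiler": ELECTROLYSIS_EFF * H2_BOILER_EFF,  # H2 transport/storage losses ignored
    "resistance heater": 1.0,
    "heat pump": HEAT_PUMP_COP,
}


def electricity_needed(heat_kwh: float, route: str) -> float:
    """kWh of electricity required to deliver heat_kwh of heat via the given route."""
    return heat_kwh / HEAT_PER_KWH_ELEC[route]


if __name__ == "__main__":
    for route in HEAT_PER_KWH_ELEC:
        print(f"{route:>17}: {electricity_needed(100.0, route):6.1f} kWh of electricity per 100 kWh of heat")
```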

I know it’s backwards to the way you’re thinking now. But it’s not wrong.

Replacing comfort heating use of natural gas with hydrogen is fraught with difficulties.

https://www.linkedin.com/pulse/hydrogen-replace-natural-gas-numbers-paul-martin/

Hydrogen takes 3x as much energy to move as natural gas, which takes about as much energy to move as electricity. But per unit of exergy moved, electricity wins hands down. Those thinking it's easier to move hydrogen than electricity are fooling themselves. And those who think that re-using the natural gas grid just makes sense, despite the problems mentioned in my article above, are suffering from the sunk cost fallacy- and are buying a bill of goods from the fossil fuel industry. When the alternative is going out of business, people imagine all sorts of things might make sense if they allow them to stay in business.
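
One simplified way to see where a factor of about 3 comes from: for an ideal gas, isothermal compression work depends only on the number of moles and the pressure ratio, not on which gas it is, while a mole of hydrogen carries only about 30% of the lower heating value (LHV) of a mole of methane. The Python sketch below treats both as ideal gases and ignores pipeline friction and compressor inefficiency; it is an illustration, not the author's own derivation, and the 40 to 70 bar pressures are hypothetical.

```python
import math

R = 8.314  # J/(mol*K), universal gas constant

# Lower heating values per mole, kJ/mol (water product leaves as vapour)
LHV_KJ_PER_MOL = {"hydrogen": 241.8, "methane": 802.3}


def isothermal_work_kj_per_mol(p_in_bar: float, p_out_bar: float, temp_k: float = 288.15) -> float:
    """Ideal-gas isothermal compression work per mole, W = RT ln(p_out/p_in); the same for any ideal gas."""
    return R * temp_k * math.log(p_out_bar / p_in_bar) / 1000.0


def work_per_kj_fuel(gas: str, p_in_bar: float, p_out_bar: float) -> float:
    """kJ of compression work per kJ (LHV) of fuel energy moved."""
    return isothermal_work_kj_per_mol(p_in_bar, p_out_bar) / LHV_KJ_PER_MOL[gas]


if __name__ == "__main__":
    # Hypothetical pipeline recompression from 40 to 70 bar
    h2 = work_per_kj_fuel("hydrogen", 40.0, 70.0)
    ch4 = work_per_kj_fuel("methane", 40.0, 70.0)
    print(f"hydrogen needs {h2 / ch4:.2f}x the compression work per unit of energy moved")
```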

Hydrogen as Energy Storage

We’re going to need to store electricity from wind and solar- that is obvious.

We’re also going to need to store some energy in molecules, for those weeks in the winter when the solar panels are covered in snow, and a high pressure area has set in and wind has dropped to nothing.

It is, however, a non-sequitur to conclude that therefore we must make those molecules from electricity! It’s possible, but it is by no means the only option nor the most sensible one.

https://www.linkedin.com/pulse/hydrogen-from-renewable-electricity-our-future-paul-martin/

…But…Green Hydrogen is Going to Be So Cheap!

No, sorry folks, it isn’t.

The reality is, black hydrogen is much cheaper. And if you don’t carbon tax the hell out of black hydrogen, that’s what you’re going to get.

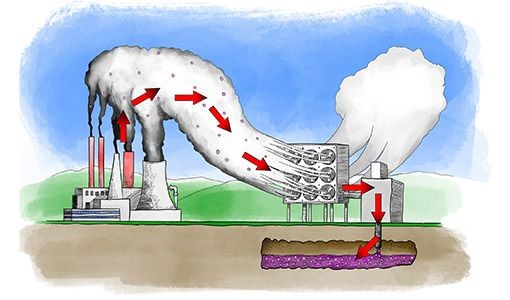

Replacing black hydrogen has to be our focus- our priority- for any green hydrogen we make. But sadly, blue (CCS) hydrogen is likely to be cheaper. Increasing carbon taxes are going to turn black hydrogen into muddy black-blue hydrogen, as the existing users of steam methane reformers (SMRs) gradually start to capture and bury the easy portion of the CO2 coming from their gas purification trains- the portion they’re simply dumping into the atmosphere for free at the moment.

https://onlinelibrary.wiley.com/doi/full/10.1002/ese3.956

There is no green hydrogen to speak of right now. Why not? Because nobody can afford it. It costs a multiple of the cost of blue hydrogen, which costs a multiple of the cost of black hydrogen.

The reality is, you can't afford either the electricity or the capital to make green hydrogen. The limit cases are instructive: imagine you can get electricity for 2 cents per kWh- sounds great, right? All in, H2 production takes about 55 kWh/kg. That's $1.10 per kg just to buy the electricity, with nothing left for capital or other operating costs. And yet that's the current price on the US Gulf Coast for wholesale hydrogen internal to an ammonia plant like this one- brand new, being constructed in Texas City- using Air Products' largest black hydrogen SMR.

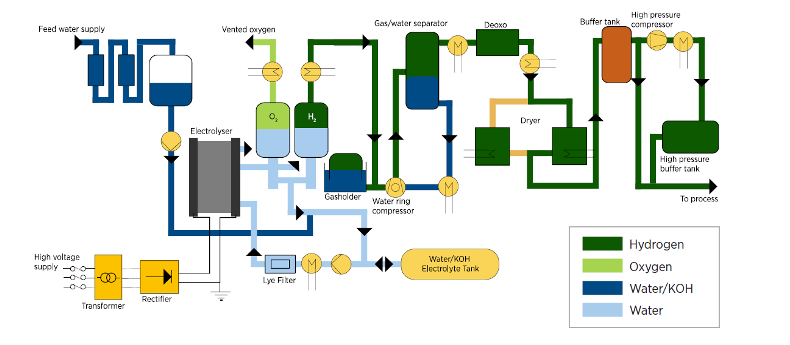
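
The electricity-only limit case above is a single multiplication. A minimal Python sketch, using the 55 kWh/kg all-in figure from the text and nothing else:

```python
KWH_PER_KG = 55.0  # all-in electricity use per kg of H2, from the text


def electricity_cost_per_kg(price_per_kwh: float, kwh_per_kg: float = KWH_PER_KG) -> float:
    """Electricity cost alone, in $ per kg of H2, before any capital or other operating cost."""
    return price_per_kwh * kwh_per_kg


def max_electricity_price(h2_price_per_kg: float, kwh_per_kg: float = KWH_PER_KG) -> float:
    """Electricity price at which electricity alone consumes the entire H2 selling price."""
    return h2_price_per_kg / kwh_per_kg


if __name__ == "__main__":
    print(f"${electricity_cost_per_kg(0.02):.2f} per kg at 2 cents/kWh")
    print(f"{max_electricity_price(1.10) * 100:.1f} cents/kWh uses up a $1.10/kg price")
```

Run backwards, the second function makes the same point: at a $1.10/kg selling price, 2 cents/kWh is the ceiling before a single dollar goes to capital.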

At the other end, let's imagine you get your electricity for free! But you only get it for free at a 45% capacity factor- which, by the way, would be the entire output of an offshore wind park, about as good as you can possibly get for renewable electricity (solar here in Ontario, for instance, has only a 16% capacity factor).

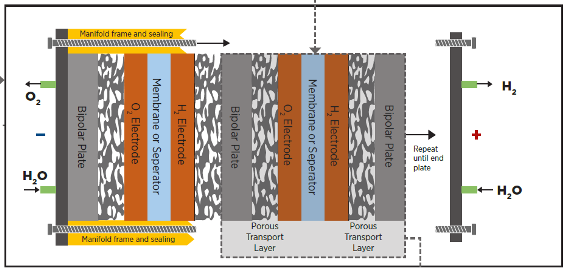

If you had 1 MW worth of electrolyzer, you could make about 200 kg of H2 per day at 45% capacity factor. If you could sell it all for $1.50/kg, and you could do that for 20 yrs, and whoever gave you the money didn’t care about earning a return on their investment, you could pay about $2.1 million for your electrolyzer set-up- the electrolyzer, water treatment, storage tanks, buildings etc.- assuming you didn’t have any other operating costs (you will have). And…sadly…that’s about what an electrolyzer costs right now, installed. And no, your electrolyzer will not last more than 20 yrs either.
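
The limit case above can be reproduced in a few lines. This Python sketch makes exactly the paragraph's assumptions (free electricity, no other operating costs, no return on investment, 20 years at $1.50/kg) plus the 55 kWh/kg all-in figure quoted earlier; it is a back-of-envelope check, not a project model.

```python
KWH_PER_KG = 55.0  # all-in electricity use per kg of H2, from the text


def h2_kg_per_day(electrolyzer_mw: float, capacity_factor: float, kwh_per_kg: float = KWH_PER_KG) -> float:
    """Daily hydrogen output of an electrolyzer running at the given capacity factor."""
    return electrolyzer_mw * 1000.0 * 24.0 * capacity_factor / kwh_per_kg


def max_affordable_capex(kg_per_day: float, price_per_kg: float, years: int) -> float:
    """Undiscounted lifetime revenue: the most you could pay up front if nobody expects a return."""
    return kg_per_day * 365.0 * years * price_per_kg


if __name__ == "__main__":
    daily = h2_kg_per_day(1.0, 0.45)
    print(f"{daily:.0f} kg H2/day")
    print(f"${max_affordable_capex(daily, 1.50, 20) / 1e6:.2f} million over 20 years")
```

That works out to roughly 196 kg/day and about $2.15 million, in line with the rounded figures in the text.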

Will the capital costs get better? Sure! With scale, electrolyzers will get cheaper per MW as people start mass-producing them. And as you make your project bigger, the cost of the associated equipment as a proportion of the total project cost will drop too- to an extent, though not infinitely.

But the fundamental problem here is that a) electricity is never free b) cheap electricity is never available 24/7, so it always has a poor capacity factor and c) electrolyzers are not only not free, they are very expensive and only part of the cost of a hydrogen production facility.
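
Those three factors meet in a standard levelized-cost calculation. The Python sketch below adds a capital recovery factor, i.e. a return on investment, which the 1 MW limit case above deliberately left out. The $2.1 million installed cost for 1 MW and the 16% and 45% capacity factors come from the text; the 8% discount rate, the 90% capacity factor, and zero fixed O&M are assumptions for illustration.

```python
HOURS_PER_YEAR = 8760.0


def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of up-front capital to recover each year to earn `rate` over `years`."""
    if rate == 0:
        return 1.0 / years
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


def levelized_cost_per_kg(capex: float, rate: float, years: int, electrolyzer_mw: float,
                          capacity_factor: float, elec_price_per_kwh: float,
                          fixed_opex_per_year: float = 0.0, kwh_per_kg: float = 55.0) -> float:
    """$ per kg H2: annualized capital plus fixed costs spread over annual output, plus electricity."""
    annual_kg = electrolyzer_mw * 1000.0 * HOURS_PER_YEAR * capacity_factor / kwh_per_kg
    annual_fixed = capex * capital_recovery_factor(rate, years) + fixed_opex_per_year
    return annual_fixed / annual_kg + elec_price_per_kwh * kwh_per_kg


if __name__ == "__main__":
    # 16%: Ontario solar, 45%: offshore wind (both from the text); 90%: hypothetical
    for cf in (0.16, 0.45, 0.90):
        cost = levelized_cost_per_kg(2.1e6, 0.08, 20, 1.0, cf, elec_price_per_kwh=0.02)
        print(f"capacity factor {cf:.0%}: ${cost:.2f}/kg")
```

With a zero discount rate and free electricity the formula collapses back to the simple revenue-over-lifetime check; any positive rate raises the capital charge per kg, and it rises further as the capacity factor falls.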

Can you improve the capacity factor by using batteries? If you do, your cost per kWh increases a lot- and that dispatchable electricity in the battery is worth a lot more to the grid than you could possibly make by making hydrogen from it.

Can you improve the capacity factor by making your electrolyzer smaller than the capacity of your wind/solar park? Yes, but then the cost per kWh of your feed electricity increases because you’re using your wind/solar facility less efficiently, throwing away a bunch of its kWh. And I thought that concern over wasting that surplus electricity was the whole reason we were making hydrogen from it!?!?
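
The trade-off can be sketched with an hourly generation profile: a smaller electrolyzer runs at a higher capacity factor, but more of the park's output is thrown away, so each kWh actually used has to carry more of the park's cost. In the Python sketch below, the four-hour profile and the 4 cents/kWh generation cost are made-up illustrations, not data from any real wind or solar park.

```python
def electrolyzer_dispatch(generation_mw: list[float], electrolyzer_mw: float) -> tuple[float, float]:
    """Return (electrolyzer capacity factor, fraction of generation curtailed), hour by hour."""
    total_gen = sum(generation_mw)
    if total_gen <= 0 or electrolyzer_mw <= 0:
        raise ValueError("need positive generation and a positive electrolyzer size")
    # Each hour the electrolyzer absorbs at most its own rating
    used = sum(min(g, electrolyzer_mw) for g in generation_mw)
    capacity_factor = used / (electrolyzer_mw * len(generation_mw))
    curtailed = 1.0 - used / total_gen
    return capacity_factor, curtailed


def cost_per_used_kwh(generation_cost_per_kwh: float, curtailed_fraction: float) -> float:
    """The whole park's cost is spread over only the kWh the electrolyzer actually uses."""
    return generation_cost_per_kwh / (1.0 - curtailed_fraction)


if __name__ == "__main__":
    profile = [100.0, 60.0, 20.0, 60.0]  # hypothetical hourly output of a 100 MW park
    for size in (100.0, 50.0, 25.0):
        cf, cut = electrolyzer_dispatch(profile, size)
        print(f"{size:5.0f} MW electrolyzer: CF {cf:.0%}, curtailed {cut:.0%}, "
              f"${cost_per_used_kwh(0.04, cut):.3f} per kWh used")
```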

John Poljak has done a good job running the numbers, and the numbers don't lie. Getting green hydrogen to the scale necessary to compete with blue, much less black, hydrogen is going to take tens to hundreds of billions of dollars that would be better spent on things that would actually decarbonize our economy.

https://www.linkedin.com/feed/update/urn:li:activity:6761296385645117440/

UPDATE: John’s most recent paper makes it even clearer- the claims being made by green hydrogen proponents of ultra-low costs per kg of H2 are “aspirational” and very hard to justify in the near term. They require a sequence of miracles to come true.

https://www.linkedin.com/feed/update/urn:li:activity:6826148496073207809/

Why Does This Make You So Angry, Paul?

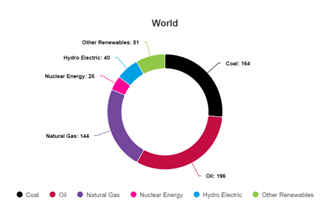

We’ve known these things for a long time. Nothing has changed, really. Renewable electricity is more available, popular, and cheaper than ever. But nothing about hydrogen has changed. 120 megatonnes of the stuff was made last year, and 98.5% of it was made from fossils, without carbon capture. It’s a technical gas, used as a chemical reagent. It is not used as a fuel or energy carrier right now, at all. And that’s for good reasons associated with economics that come right from the basic thermodynamics.

What we have is interested parties muddying the waters, selling governments a bill of goods- and believe me, those parties intend to issue an invoice when that bill of goods has been sold! And that’s leading us toward an end that I think is absolutely the wrong way to go: it’s leading us toward a re-creation of the fossil fuel paradigm, selling us a fossil fuel with a thick obscuring coat of greenwash. That’s not in the interest of solving the crushing problem of anthropogenic global warming:

https://www.linkedin.com/pulse/global-warming-risk-arises-from-three-facts-paul-martin/

Where Does Hydrogen Make Sense?

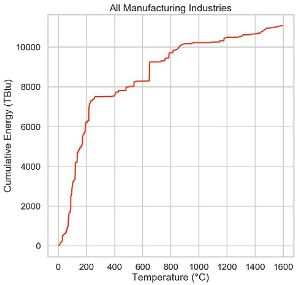

We need to solve the decarbonization problem OF hydrogen first. Hydrogen is a valuable (120 million tonne per year) commodity CHEMICAL- a reducing agent and feedstock for innumerable processes, most notably ammonia, as already mentioned. That's a 40 million tonne market, essential for human life, and almost entirely supplied by BLACK hydrogen right now. Fix those problems FIRST, before dreaming of having any excess to waste as an inefficient, ineffective heating or comfort fuel!!!

Here's my version of Michael Liebreich's hydrogen merit order ladder. I've added coloured circles to the applications where I think there are better solutions THAN hydrogen. Only the ones in black make sense to me in terms of long-term decarbonization, assuming we solve the problem OF hydrogen by finding ways to afford to not make it from methane or coal with CO2 emissions to the atmosphere- virtually the only way we actually make hydrogen today.

If Not Hydrogen, Then What?

Here’s my suite of solutions. The only use I have for green hydrogen is as a replacement for black hydrogen- very important so we can keep eating.

https://www.linkedin.com/pulse/what-energy-solutions-paul-martin/

There are also a few difficult industrial applications where H2 can replace what we use today. Reducing iron ore to iron metal is one example- it is already a significant user of hydrogen, and more projects are being planned and piloted as we speak. But there, hydrogen is not being used as a fuel per se- it is being used as a chemical reducing agent to replace carbon monoxide made from coal coke. The reaction between iron oxide and hydrogen is actually slightly endothermic. The heat can be supplied with electricity- in fact, arc furnaces are already widely used to make steel from steel scrap.
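
Because hydrogen is a reagent here rather than a fuel, the amount needed follows from stoichiometry. A minimal Python sketch for hematite, Fe2O3 + 3 H2 → 2 Fe + 3 H2O, i.e. 1.5 mol of H2 per mol of iron. This is the stoichiometric minimum only (practical shaft furnaces feed more hydrogen than that and recycle the unreacted gas), and the electricity figure reuses the 55 kWh/kg quoted earlier.

```python
M_FE = 55.845    # g/mol, iron
M_H2 = 2.016     # g/mol, hydrogen
H2_PER_FE = 1.5  # mol H2 per mol Fe, from Fe2O3 + 3 H2 -> 2 Fe + 3 H2O


def h2_kg_per_tonne_iron() -> float:
    """Stoichiometric minimum hydrogen to reduce hematite to one tonne of iron."""
    return H2_PER_FE * M_H2 / M_FE * 1000.0


def electrolysis_mwh_per_tonne_iron(kwh_per_kg: float = 55.0) -> float:
    """Electricity to make that hydrogen by electrolysis, in MWh per tonne of iron."""
    return h2_kg_per_tonne_iron() * kwh_per_kg / 1000.0


if __name__ == "__main__":
    print(f"{h2_kg_per_tonne_iron():.1f} kg H2 and "
          f"{electrolysis_mwh_per_tonne_iron():.2f} MWh per tonne of Fe")
```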

In summary: the hydrogen economy is a bill of goods, being sold to you. You may not see the invoice for that bill of goods, but the fossil fuel industry has it ready and waiting for you, or your government, to pay it- once you’ve taken the green hydrogen bait.

DISCLAIMER: everything I say here, and in each of these articles, is my own opinion. I come by it honestly, after having worked with and made hydrogen and syngas for 30 yrs. If I’ve said something in error, please by all means correct me! Point out why what I’ve said is wrong, with references, and I’ll happily correct it. If you disagree with me, disagree with me in the comments and we’ll have a lively discussion- but go ad hominem and I’ll block you.